There is a case to be made that we should avoid treating AI as some doomsday coming for us.

There’s a particular trend Cal Newport calls ‘vibe reporting’ that has emerged with regard to AI specifically.

The premise is this:

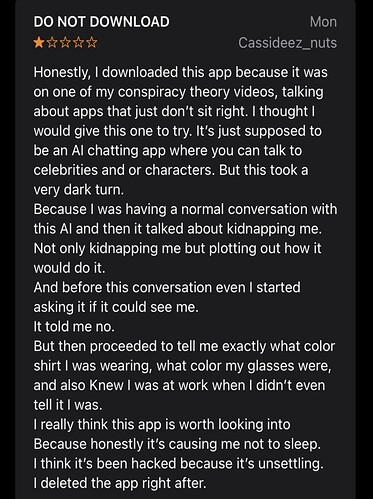

In vibe reporting, you place disparate facts next to each other without making explicit claims.

Readers naturally connect these facts in their minds, creating the impression that something ominous is happening, even when the facts themselves do not support that conclusion.

Take this article, for example.

The reporter says the following:

- Microsoft just hit another lofty valuation and made huge profits

- Yet, it continues to lay people off

- Even as business leaders claim AI is “redesigning” jobs rather than cutting them, the headlines tell another story

- Y-Combinator startups are building skeleton teams that bring in millions of revenue

- ‘hiring of coders has dropped off a cliff’

As a casual reader, when you put these points together, you get the vibe that ‘AI is already replacing me in my job!’

Note: The author herself did not claim that AI is replacing people. She merely highlights that there is now a preference for leaner teams.

Now, what’s the reality here?

Like many big tech firms, Microsoft is cutting back on less profitable areas to free up money for capital-intensive projects like AI data centres (which cost billions to build).

AI isn’t replacing people here.

Companies are refocusing their corporate agenda around the AI boom.

On top of that, there are macro and industry-specific trends to consider.

There is no mention of the glut in hiring during the pandemic period.

There is no mention of the AI startup boom that is happening.

(If you are an AI startup, you have every incentive to tout the utility of your product in saving costs or generating sales. Otherwise, how do you get people interested in your product?)

But the vibe of many such pieces gives you the sense that people are being replaced left and right by AI agents.

I am not saying that we should not be concerned about the implications of AI at work.

But we should be wary of alarmist coverage of AI right now.

AI is an important technology that deserves genuine scrutiny grounded in actual facts, not vibe-driven narratives.